Detailed server hardware requirements

This topic describes the server hardware requirements for the Pexip Infinity platform.

Host server hardware requirements

The following table lists the recommended hardware requirements for the Management Node and Conferencing Node (Proxying Edge Nodes and Transcoding Conferencing Nodes) host servers.

| Management Node | Transcoding Conferencing Node | Proxying Edge Node | |

|---|---|---|---|

| Server manufacturer | Any | ||

|

Processor make (see also Performance considerations) |

Any |

We recommend 3rd- or 4th-generation Intel Xeon Scalable Series processors (Ice Lake / Sapphire Rapids) Gold 63xx/64xx for Transcoding Conferencing Nodes.

|

Any x86-64 processor which supports at least the AVX instruction set. Most Intel Xeon Scalable Series and Xeon E5/E7-v3 and -v4 processors are suitable. If a mixture of older and newer hardware is available, we recommend using the older or less capable hardware for proxying nodes and the newest or most powerful for transcoding nodes. |

| Processor instruction set | Any | AVX2 or AVX512 (AVX is also supported) | AVX |

| Processor architecture | x86-64 | ||

| Processor speed | 2.0 GHz |

2.6 GHz (or faster) base clock speed if using Hyper-Threading on 3rd-generation Intel Xeon Scalable Series (Ice Lake) processors or newer.

|

2.0 GHz |

| No. of vCPUs |

Minimum 4 |

Minimum 4 vCPU per node Maximum 48 vCPU per node, i.e. 24 cores if using Hyper-Threading

|

Minimum 4 vCPU per node Maximum 8 vCPU per node |

| Processor cache | no minimum | 20 MB or greater | no minimum |

| Total RAM |

Minimum 4 GB (minimum 1 GB RAM for each Management Node vCPU) |

1 GB RAM per vCPU, so either:

|

1GB RAM per vCPU |

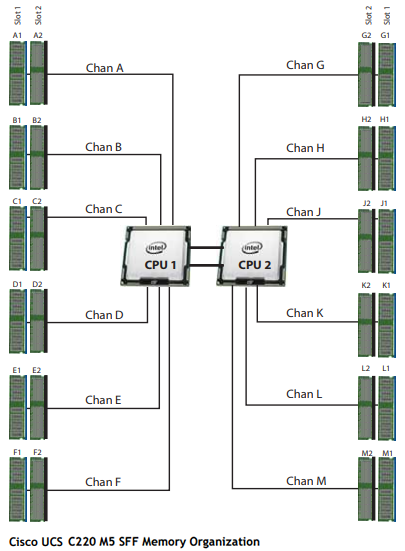

| RAM makeup | Any | All channels must be populated with a DIMM, see Memory configuration below. Intel Xeon Scalable series processors support 6 DIMMs per socket and older Xeon E5 series processors support 4 DIMMs per socket. | Any |

| Hardware allocation | The host server must not be over-committed (also referred to as over-subscribing or over-allocation) in terms of either RAM or CPU. In other words, the Management Node and Conferencing Nodes each must have dedicated access to their own RAM and CPU cores. | ||

| Storage space required | 100 GB SSD |

|

|

|

|

|||

| GPU | No specific hardware cards or GPUs are required. | ||

| Network | Gigabit Ethernet connectivity from the host server. | ||

| Operating System | The Pexip Infinity VMs are delivered as VM images (.ova etc.) to be run directly on the hypervisor. No OS should be installed. | ||

|

Hypervisor (see also Performance considerations) |

Recommended hypervisors:

Supported but not recommended for new deployments:

|

||

|

|

|||
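As a rough illustration, the vCPU and RAM figures from the table above can be turned into a quick pre-deployment check. This is a minimal sketch, not a Pexip tool: the function name `check_node`, the role labels, and the `LIMITS` dictionary are hypothetical, and only the numeric limits come from the table.

```python
# Hypothetical helper: only the numeric limits below come from the table above.
# (min vCPUs, max vCPUs) per node role; None means the table states no maximum.
LIMITS = {
    "management": (4, None),
    "transcoding": (4, 48),
    "proxying": (4, 8),
}
RAM_GB_PER_VCPU = 1        # 1 GB RAM per vCPU for every node type
MANAGEMENT_MIN_RAM_GB = 4  # Management Node needs at least 4 GB in total


def check_node(role, vcpus, ram_gb):
    """Return a list of problems; an empty list means the allocation fits the table."""
    min_vcpus, max_vcpus = LIMITS[role]
    problems = []
    if vcpus < min_vcpus:
        problems.append(f"{role}: needs at least {min_vcpus} vCPUs, got {vcpus}")
    if max_vcpus is not None and vcpus > max_vcpus:
        problems.append(f"{role}: at most {max_vcpus} vCPUs per node, got {vcpus}")
    required_ram_gb = vcpus * RAM_GB_PER_VCPU
    if role == "management":
        required_ram_gb = max(required_ram_gb, MANAGEMENT_MIN_RAM_GB)
    if ram_gb < required_ram_gb:
        problems.append(f"{role}: needs {required_ram_gb} GB RAM, got {ram_gb} GB")
    return problems


if __name__ == "__main__":
    print(check_node("transcoding", 48, 48))  # fits the table, so no problems
    print(check_node("proxying", 16, 16))     # exceeds the 8 vCPU maximum
```

The check covers only vCPU count and RAM; processor speed, cache, and memory channel population still need to be verified separately.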

Capacity

The number of calls (or ports) that can be achieved per server in a Pexip Infinity deployment depends on several factors, including the specifications of the particular server and the bandwidth of each call. For more information, see Capacity planning.

As a general indication of capacity: servers that are older, have slower processors, or have fewer CPUs have a lower overall capacity. Newer servers with faster processors have a greater capacity. The use of NUMA affinity and Hyper-Threading can significantly increase capacity.

Performance considerations

The types of processor and hypervisor used in your deployment affect the level of performance you can achieve. Some known performance considerations are described below.

Intel AVX2 / AVX512 processor instruction set

Pexip Infinity can make full use of the AVX2 and AVX512 instruction sets provided by modern Intel processors. This increases the performance of video encoding and decoding.

The VP9 codec is also available for connections to Conferencing Nodes running the AVX2 or later instruction set. VP9 uses around one third less bandwidth for the same resolution when compared to VP8. Note however that VP9 calls consume around 1.25 times the CPU resource (ports) on the Conferencing Node.
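The VP9 trade-off can be worked through with simple arithmetic. The sketch below treats the approximate figures above (one third less bandwidth, 1.25 times the CPU resource) as exact, and the function name and the sample inputs (a 1500 kbps VP8 call, a node with room for 40 VP8 calls) are hypothetical values chosen only for illustration.

```python
# Approximate ratios from the text above, treated as exact for illustration.
VP9_PORT_FACTOR = 1.25  # a VP9 call uses ~1.25x the ports of a VP8 call


def vp9_estimate(vp8_kbps, vp8_calls_per_node):
    """Estimate per-call bandwidth and per-node call count when VP8 calls become VP9."""
    vp9_kbps = vp8_kbps * 2 / 3  # "around one third less bandwidth"
    vp9_calls = vp8_calls_per_node / VP9_PORT_FACTOR
    return vp9_kbps, vp9_calls


if __name__ == "__main__":
    # Hypothetical inputs: 1500 kbps per VP8 call, 40 VP8 calls per node.
    kbps, calls = vp9_estimate(1500, 40)
    print(f"VP9: about {kbps:.0f} kbps per call, about {calls:.0f} calls per node")
```

With these sample inputs each call needs about 1000 kbps instead of 1500 kbps, while the node handles about 32 calls instead of 40.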

AMD processors

We have observed during internal testing that use of AMD processors results in a reduction of capacity (measured by ports per core) of around 40% when compared to an identically configured Intel platform. This is because current AMD processors do not execute advanced instruction sets at the same speed as Intel processors.

AMD processors older than 2012 may not perform sufficiently and are not recommended for use with the Pexip Infinity platform.
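To see what a reduction of around 40% in ports per core means for sizing, the following sketch estimates how many AMD cores would be needed to match a given number of Intel cores. It assumes capacity scales linearly with core count and treats the 40% figure as exact; both are simplifying assumptions, and the function name is hypothetical.

```python
import math


def amd_cores_to_match(intel_cores, reduction_percent=40):
    """Cores needed on AMD to match the capacity of `intel_cores` Intel cores.

    Assumes linear scaling with core count and an exact per-core reduction.
    """
    remaining_percent = 100 - reduction_percent  # capacity left per AMD core
    return math.ceil(intel_cores * 100 / remaining_percent)


if __name__ == "__main__":
    # Hypothetical example: matching a 24-core Intel host.
    print(amd_cores_to_match(24))
```

Under these assumptions, an AMD host needs roughly 1.67 times as many cores as the equivalent Intel host.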

Memory configuration

Memory must be distributed across all of the memory channels (i.e. 6 to 8 channels per socket on the Xeon Scalable series, and 4 channels per socket on the Xeon E5 and E7 series).

There must be an equal amount of memory per socket, and all sockets must have all memory channels populated (you do not need to populate all slots in a channel; one DIMM per channel is sufficient). Do not, for example, use two large DIMMs rather than four lower-capacity DIMMs. Using only two per socket results in half the memory bandwidth, because the memory interface is designed to read from up to four DIMMs in parallel.

The smaller Intel Xeon Scalable processors (Silver and Bronze series) can be safely deployed with 4 memory channels per socket, but we do not recommend the Silver and Bronze series for most Pexip workloads.
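The memory population rules above can be expressed as a small check. This is a sketch under stated assumptions: the function name and input format are hypothetical, each listed DIMM is assumed to sit in its own channel, and the bandwidth estimate generalizes the "two of four DIMMs gives half the bandwidth" example proportionally, which is an approximation.

```python
def check_memory_layout(dimms_gb_per_socket, channels_per_socket):
    """Check DIMM population against the rules above.

    dimms_gb_per_socket: one list per socket of DIMM sizes in GB, assuming
    each listed DIMM occupies its own memory channel.
    Returns a list of problems; an empty list means the layout passes.
    """
    problems = []
    # Rule: every socket must have the same total amount of memory.
    totals = {sum(dimms) for dimms in dimms_gb_per_socket}
    if len(totals) > 1:
        problems.append("sockets have unequal amounts of memory")
    # Rule: every memory channel on every socket must hold a DIMM.
    for index, dimms in enumerate(dimms_gb_per_socket):
        if len(dimms) < channels_per_socket:
            fraction = len(dimms) / channels_per_socket
            problems.append(
                f"socket {index}: {len(dimms)} of {channels_per_socket} channels "
                f"populated (roughly {fraction:.0%} of memory bandwidth)"
            )
    return problems


if __name__ == "__main__":
    # Two sockets with 4 channels each: 4 x 16 GB passes, 2 x 32 GB does not.
    print(check_memory_layout([[16] * 4, [16] * 4], channels_per_socket=4))
    print(check_memory_layout([[32, 32], [32, 32]], channels_per_socket=4))
```

Both sample layouts provide 64 GB per socket, but only the first populates every channel.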